Ubuntu Distribution Upgrade: Not enough free disk space

Recently, we tried to upgrade an Ubuntu 20.04 desktop to 22.04. Partway through the upgrade, we got the following error:

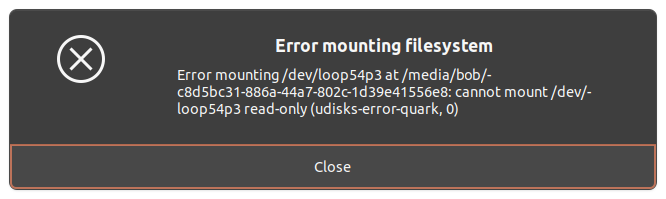

The upgrade has aborted. The upgrade needs a total of 10,6 G free space on disk '/'. Please free at least an additional 8201 M of disk space on '/'. Empty your trash and remove temporary packages of former installations using 'sudo apt-get clean'.

The upgrade has aborted. The upgrade needs a total of 430 M free space on disk '/boot'. Please free at least an additional 38,4 M of disk space on '/boot'. You can remove old kernels using 'sudo apt autoremove' and you could also set COMPRESS=xz in /etc/initramfs-tools/initramfs.conf to reduce the size of your initramfs.
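Before removing anything, it can help to see how much space is actually free on the two filesystems the message names. The sketch below is one way to check. The names `free_mib` and `has_free_mib` are hypothetical helpers, not part of the original steps, and the values are reported in MiB, which may not match the upgrader's units exactly.

```shell
# Hypothetical helper: print the free space, in MiB, on the filesystem
# that holds the path given as "$1".
free_mib() {
    # -P forces the one-line-per-filesystem POSIX layout,
    # -k reports sizes in 1024-byte blocks; column 4 is "Available".
    df -Pk "$1" | awk 'NR == 2 { print int($4 / 1024) }'
}

# Hypothetical helper: succeed if the path "$1" has at least "$2" MiB free.
has_free_mib() {
    [ "$(free_mib "$1")" -ge "$2" ]
}

# Report the free space on the two locations named in the error message.
for dir in / /boot; do
    if [ -d "$dir" ]; then
        printf '%s: %s MiB free\n' "$dir" "$(free_mib "$dir")"
    fi
done
```

For example, `has_free_mib /boot 430` should succeed only once '/boot' has the 430 M the upgrader asked for.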

First, we ran apt autoremove, as the message suggests, to remove packages that were no longer needed. Unfortunately, that did not free enough space.

sudo apt autoremove;

Next, we needed to find leftover packages from older kernel versions. The following command takes the release of the running kernel from uname -r and lists the installed linux-headers and linux-image packages whose names do not contain it.

dpkg -l 'linux-*' | sed '/^ii/!d;/'"$(uname -r | sed "s/\(.*\)-\([^0-9]\+\)/\1/")"'/d;s/^[^ ]* [^ ]* \([^ ]*\).*/\1/;/[0-9]/!d;/^linux-\(headers\|image\)/!d';
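The sed one-liner above is dense, so here is a more readable sketch of the same idea. `list_old_kernel_packages` is a hypothetical helper name, not something from the original steps. Unlike the original command, it only prints packages that have a version number directly after linux-headers- or linux-image-, so metapackages such as linux-image-generic-hwe-20.04 are skipped. Like the original, it matches the kernel release as a plain substring.

```shell
# Hypothetical helper: read package names on stdin and print the versioned
# linux-headers / linux-image packages that do not belong to the kernel
# release given as "$1" (normally the output of `uname -r`).
list_old_kernel_packages() {
    # Strip the flavour suffix, e.g. 5.15.0-48-generic -> 5.15.0-48,
    # so that flavour-less header packages are also recognised as current.
    running=$(printf '%s\n' "$1" | sed 's/-[^0-9-]*$//')

    # Keep only versioned header/image packages, then drop the running release.
    grep -E '^linux-(headers|image)-[0-9]' | grep -vF -- "$running"
}

# Usage on a real system (assumes dpkg-query is available):
#   dpkg-query -W -f='${db:Status-Abbrev} ${Package}\n' 'linux-*' \
#     | awk '$1 == "ii" { print $2 }' \
#     | list_old_kernel_packages "$(uname -r)"
```

Keeping the filter in a function that reads names from stdin makes it easy to try on a hand-written list before pointing it at the real package database.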

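Then we used apt-get purge to remove the versioned header and image packages that we no longer needed. We left the linux-headers-generic-hwe-20.04 and linux-image-generic-hwe-20.04 metapackages in place. In our case, the listing and the purge looked like this: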

$ dpkg -l 'linux-*' | sed '/^ii/!d;/'"$(uname -r | sed "s/\(.*\)-\([^0-9]\+\)/\1/")"'/d;s/^[^ ]* [^ ]* \([^ ]*\).*/\1/;/[0-9]/!d;/^linux-\(headers\|image\)/!d'
linux-headers-5.13.0-52-generic
linux-headers-5.15.0-46-generic
linux-headers-generic-hwe-20.04
linux-image-5.15.0-46-generic
linux-image-generic-hwe-20.04
$ sudo apt-get -y purge linux-headers-5.13.0-52-generic linux-headers-5.15.0-46-generic linux-image-5.15.0-46-generic;

Doing so freed enough space for the upgrade to continue.
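To get a rough idea beforehand of how much a purge might free, one option is to add up the Installed-Size field that dpkg records for each package. This is only a sketch: `sum_installed_mib` is a hypothetical helper name, Installed-Size is an estimate in KiB, and the real gain can differ, for example when dependent packages are removed as well.

```shell
# Hypothetical helper: read "size<TAB>package" lines on stdin, where size is
# the Installed-Size in KiB, and print the total in whole MiB.
sum_installed_mib() {
    awk -F '\t' '{ total += $1 } END { printf "%d\n", total / 1024 }'
}

# Usage on a real system (assumes dpkg-query is available):
#   dpkg-query -W -f='${Installed-Size}\t${Package}\n' \
#     linux-headers-5.13.0-52-generic \
#     linux-headers-5.15.0-46-generic \
#     linux-image-5.15.0-46-generic \
#     | sum_installed_mib
```

The result can then be compared with the additional space the upgrader asked for.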