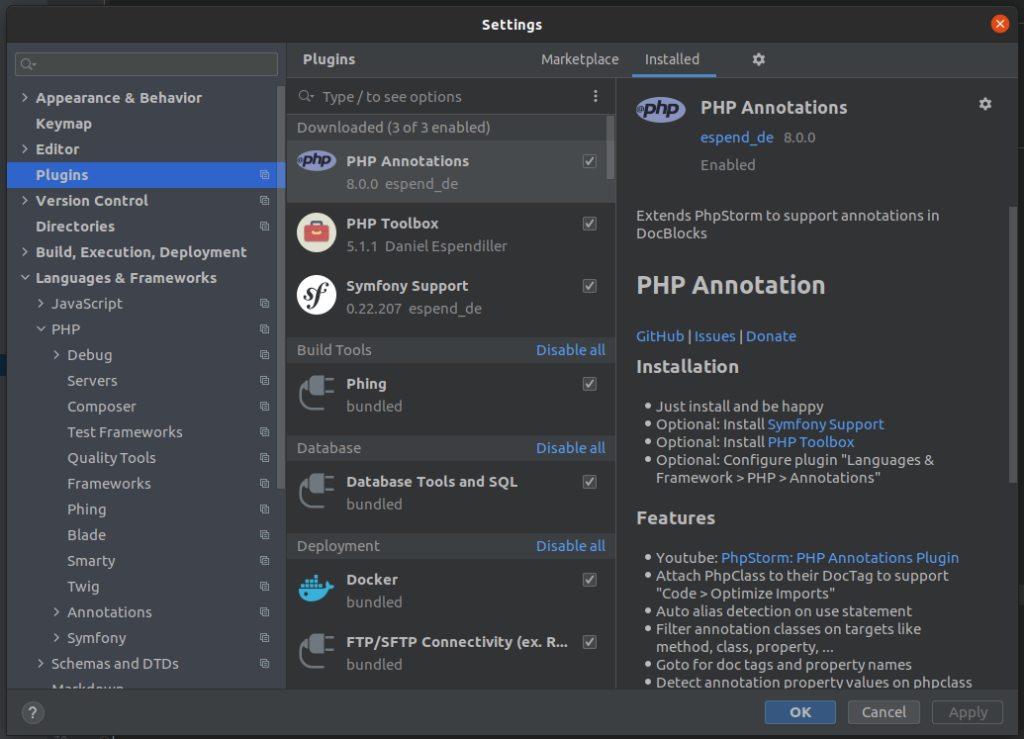

Google Hash Code 2022 – Practice Problem 1

One Pizza

Problem

You are opening a small pizzeria. In fact, your pizzeria is so small that you decided to offer only one type of pizza. Now you need to decide what ingredients to include (peppers? tomatoes? both?).

Everyone has their pizza preferences. Each of your potential clients has some ingredients they like and maybe some ingredients they dislike. Each client will come to your pizzeria if both conditions are true:

- all the ingredients they like are on the pizza, and

- none of the ingredients they dislike are on the pizza

Each client is OK with additional ingredients they neither like nor dislike being present on the pizza. Your task is to choose which ingredients to put on your only pizza type to maximize the number of clients that will visit your pizzeria.
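The two conditions above amount to a subset test and a disjointness test. A minimal sketch, assuming each client's preferences and the pizza are represented as Python sets of ingredient names (the function name `will_visit` is hypothetical, not part of the problem statement):

```python
def will_visit(likes, dislikes, pizza):
    """Return True if a client with these preferences would come for this pizza."""
    pizza = set(pizza)
    # Every liked ingredient must be present, and no disliked one may be.
    # Ingredients the client neither likes nor dislikes do not matter.
    return set(likes) <= pizza and pizza.isdisjoint(dislikes)


print(will_visit({"cheese"}, {"basil"}, {"cheese", "peppers"}))  # True
print(will_visit({"cheese"}, {"basil"}, {"cheese", "basil"}))    # False
```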

Input

- The first line contains one integer 1≤C≤10^5, the number of potential clients.

- The next 2×C lines describe the clients’ preferences, two lines per client:

  - The first line contains an integer 1≤L≤5, followed by L names of ingredients the client likes, delimited by spaces.

  - The second line contains an integer 0≤D≤5, followed by D names of ingredients the client dislikes, delimited by spaces.

Each ingredient name consists of between 1 and 15 ASCII characters. Each character is one of the lowercase letters (a-z) or a digit (0-9).
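One way to read this format is sketched below. It assumes the whole input is available as a single string with one record per line. The function name `parse_clients` and the choice of `(likes, dislikes)` set pairs are illustrative assumptions, not something the problem statement prescribes.

```python
def parse_clients(text):
    """Parse the input format into a list of (likes, dislikes) set pairs."""
    lines = text.strip().splitlines()
    num_clients = int(lines[0])
    clients = []
    for i in range(num_clients):
        # Each client occupies two lines: likes first, then dislikes.
        like_tokens = lines[1 + 2 * i].split()
        dislike_tokens = lines[2 + 2 * i].split()
        # The leading integer on each line is the count; the names follow it.
        likes = set(like_tokens[1:1 + int(like_tokens[0])])
        dislikes = set(dislike_tokens[1:1 + int(dislike_tokens[0])])
        clients.append((likes, dislikes))
    return clients


sample = "3\n2 cheese peppers\n0\n1 basil\n1 pineapple\n2 mushrooms tomatoes\n1 basil\n"
for likes, dislikes in parse_clients(sample):
    print(sorted(likes), sorted(dislikes))
```

A dislikes line of `0` yields an empty set, so clients without dislikes need no special handling.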


Submission

The submission should consist of one line consisting of a single number 0≤N followed by a list of N ingredients to put on the only pizza available in the pizzeria, separated by spaces. The list of ingredients should contain only the ingredients mentioned by at least one client, without duplicates.
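The submission line can be produced with a small helper. This is a sketch under the assumption that the chosen ingredients arrive as a list of names already drawn from the clients' preferences; `format_submission` is a hypothetical name, and the helper only removes duplicates rather than checking that every name was mentioned by a client.

```python
def format_submission(ingredients):
    """Build the single output line: the count N, then the N ingredient names."""
    # dict.fromkeys drops duplicates while keeping first-seen order.
    unique = list(dict.fromkeys(ingredients))
    return " ".join([str(len(unique)), *unique])


print(format_submission(["cheese", "mushrooms", "tomatoes", "peppers"]))
# 4 cheese mushrooms tomatoes peppers
```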

Scoring

A solution scores one point for each client that will come to your pizzeria. A client will come to your pizzeria if all the ingredients they like are on the pizza and none of the ingredients they dislike are on the pizza.

Sample

Sample Input

3

2 cheese peppers

0

1 basil

1 pineapple

2 mushrooms tomatoes

1 basil

Sample Output

4 cheese mushrooms tomatoes peppers

In the Sample Input, there are 3 potential clients:

- The first client likes 2 ingredients, cheese and peppers, and does not dislike anything.

- The second client likes only basil and dislikes only pineapple.

- The third client likes mushrooms and tomatoes and dislikes only basil.

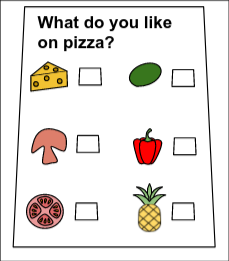

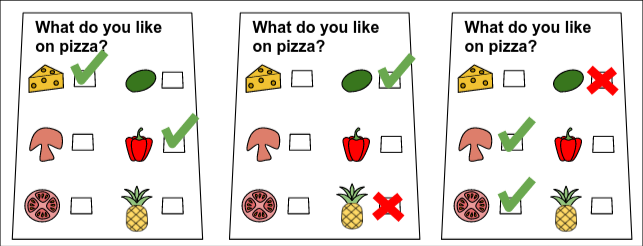

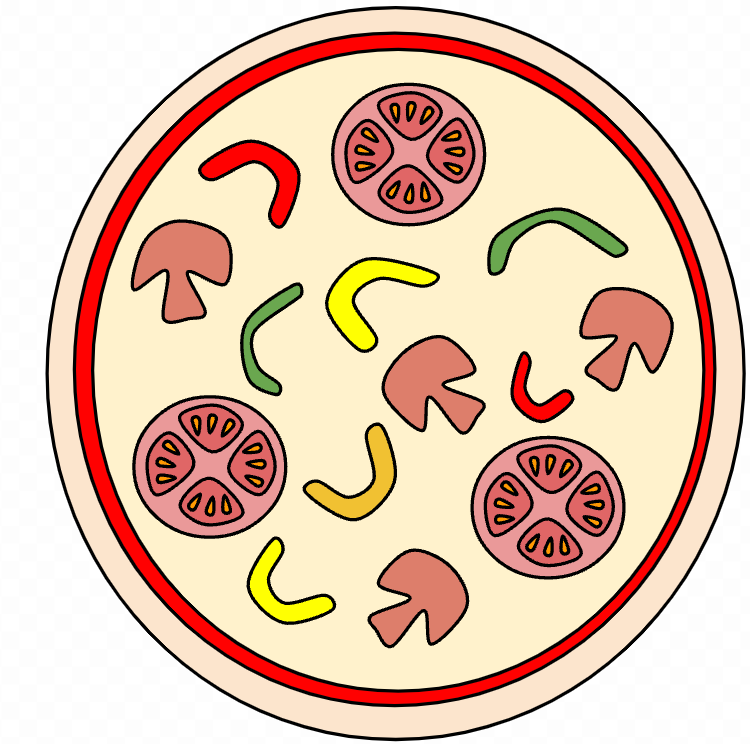

The picture below shows the preferences of 3 potential clients.

In this particular Sample Output, we choose to use 4 ingredients in the pizza: cheese, mushrooms, tomatoes, and peppers.

- The first client likes the pizza because it contains both cheese and peppers, which they like.

- The second client does not like the pizza: it does not contain basil, which they like.

- The third client likes the pizza because it contains mushrooms and tomatoes, which they like, and does not contain basil, which they do not like.

This means that submitting this output would score 2 points for this case, because two clients (the first and the third) would like this pizza.
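The 2-point result can be checked mechanically. The sketch below hard-codes the three sample clients as `(likes, dislikes)` set pairs and counts how many accept the sample pizza. The names `score`, `sample_clients` and `sample_pizza` are illustrative and do not come from the problem statement.

```python
def score(clients, pizza):
    """Count the clients whose likes are all present and whose dislikes are all absent."""
    pizza = set(pizza)
    return sum(
        1
        for likes, dislikes in clients
        if likes <= pizza and not (dislikes & pizza)
    )


# The three clients from the Sample Input, as (likes, dislikes) pairs.
sample_clients = [
    ({"cheese", "peppers"}, set()),
    ({"basil"}, {"pineapple"}),
    ({"mushrooms", "tomatoes"}, {"basil"}),
]
sample_pizza = {"cheese", "mushrooms", "tomatoes", "peppers"}

print(score(sample_clients, sample_pizza))  # 2
```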

—

Past editions

— From https://codingcompetitions.withgoogle.com/hashcode/archive

Hash Code started in 2014 with just 200 participants from France. In 2020, more than 100,000 participants from across Europe, the Middle East, and Africa took part in the competition. You can take a look at the problems and winning teams from past editions of Hash Code below.

Past problem statements

Software engineering at scale

Hash Code 2021, Final Round


Google stores the vast majority of its code in one monolithic codebase, holding billions of lines of code. As of 2016, more than 25,000 software engineers were contributing to it, improving existing services and adding cool new features to meet the demands of their users.

Traffic Signaling

Hash Code 2021, Online Qualification Round


Given the description of a city plan and planned paths for all cars in that city, optimize the schedule of traffic lights to minimize the total amount of time spent in traffic and help as many cars as possible reach their destination before a given deadline.

Assembling smartphones

Hash Code 2020, Final Round


In this problem statement, we will explore the idea of operating an automated assembly line for smartphones.

Building a smartphone is a complex process that involves assembling numerous components, including the screen, multiple cameras, microphones, speakers, a processing unit, and a storage unit.

To automate the building of a smartphone, we will be using robotic arms that can move around the assembly workspace, performing all necessary tasks.

Book scanning

Hash Code 2020, Online Qualification Round


Books allow us to discover fantasy worlds and better understand the world we live in. They enable us to learn about everything from photography to compilers… and of course, a good book is a great way to relax!

Google Books is a project that embraces the value books bring to our daily lives. It aspires to bring the world’s books online and make them accessible to everyone. In the last 15 years, Google Books has collected digital copies of 40 million books in more than 400 languages, partly by scanning books from libraries and publishers all around the world.

In this competition problem, we will explore the challenges of setting up a scanning process for millions of books stored in libraries around the world and having them scanned at a scanning facility.

Compiling Google

Hash Code 2019, Final Round


Google has a large codebase, containing billions of lines of code across millions of source files. From these source files, many more compiled files are produced, and some compiled files are then used to produce further compiled files, and so on.

Given the huge number of files, compiling them on a single server would take a long time. To speed it up, Google distributes the compilation steps across multiple servers.

In this problem, we will explore how to effectively use multiple compilation servers to optimize compilation time.

Photo slideshow

Hash Code 2019, Online Qualification Round


As the saying goes, “a picture is worth a thousand words.” We agree – photos are an important part of contemporary digital and cultural life. Approximately 2.5 billion people around the world carry a camera – in the form of a smartphone – in their pocket every day. We tend to make good use of it, too, taking more photos than ever (back in 2017, Google Photos announced it was backing up more than 1.2 billion photos and videos per day).

The rise of digital photography creates an interesting challenge: what should we do with all of these photos? In this competition problem, we will explore the idea of composing a slideshow out of a photo collection.

City Plan

Hash Code 2018, Final Round


The population of the world is growing and becoming increasingly concentrated in cities. According to the World Bank, global urbanization (the percentage of the world’s population that lives in cities) crossed 50% in 2008 and reached 54% in 2016.

The growth of urban areas creates interesting architectural challenges. How can city planners make efficient use of urban space? How should residential needs be balanced with access to public utilities, such as schools and parks?

Self-driving rides

Hash Code 2018, Online Qualification Round


Millions of people commute by car every day; for example, to school or to their workplace.

Self-driving vehicles are an exciting development for transportation. They aim to make traveling by car safer and more available while also saving commuters time.

In this competition problem, we’ll be looking at how a fleet of self-driving vehicles can efficiently get commuters to their destinations in a simulated city.

Router placement

Hash Code 2017, Final Round


Who doesn’t love wireless Internet? Millions of people rely on it for productivity and fun in countless cafes, railway stations and public areas of all sorts. For many institutions, ensuring wireless Internet access is now almost as important a feature of building facilities as the access to water and electricity. Typically, buildings are connected to the Internet using a fiber backbone. In order to provide wireless Internet access, wireless routers are placed around the building and connected using fiber cables to the backbone. The larger and more complex the building, the harder it is to pick router locations and decide how to lay down the connecting cables.

Streaming videos

Hash Code 2017, Online Qualification Round


Have you ever wondered what happens behind the scenes when you watch a YouTube video? As more and more people watch online videos (and as the size of these videos increases), it is critical that video-serving infrastructure is optimized to handle requests reliably and quickly. This typically involves putting in place cache servers, which store copies of popular videos. When a user request for a particular video arrives, it can be handled by a cache server close to the user, rather than by a remote data center thousands of kilometers away. Given a description of cache servers, network endpoints and videos, along with predicted requests for individual videos, decide which videos to put in which cache server in order to minimize the average waiting time for all requests.

Schedule Satellite Operations

Hash Code 2016, Final Round

A satellite equipped with a high-resolution camera can be an excellent source of geo imagery. While harder to deploy than a plane or a Street View car, a satellite, once launched, provides a continuous stream of fresh data. Terra Bella is a division within Google that deploys and manages high-resolution imaging satellites in order to capture rapidly updated imagery and analyze it for commercial customers. With a growing constellation of satellites and a constant need for fresh imagery, distributing the work between the satellites is a major challenge. Given a set of imaging satellites and a list of image collections ordered by customers, schedule satellite operations so that the total value of delivered image collections is as high as possible.

Optimize Drone Deliveries

Hash Code 2016, Online Qualification Round


The Internet has profoundly changed the way we buy things, but the online shopping of today is likely not the end of that change; after each purchase we still need to wait multiple days for physical goods to be carried to our doorstep. Given a fleet of drones, a list of customer orders and availability of the individual products in warehouses, schedule the drone operations so that the orders are completed as soon as possible.

Route Loon Balloons

Hash Code 2015, Final Round


Project Loon aims to bring universal Internet access using a fleet of high altitude balloons equipped with LTE transmitters. Circulating around the world, Loon balloons deliver Internet access in areas that lack conventional means of Internet connectivity. Given the wind data at different altitudes, plan altitude adjustments for a fleet of balloons to provide Internet coverage to select locations.

Optimize a Data Center

Hash Code 2015, Online Qualification Round


For over ten years, Google has been building data centers of its own design, deploying thousands of machines in locations around the globe. In each of these locations, batteries of servers are at work around the clock, running services we use every day, from Google Search and YouTube to the Judge System of Hash Code. Given a schema of a data center and a list of available servers, your task is to optimize the layout of the data center to maximize its availability.

Street View Routing

Hash Code 2014, Final Round


The Street View imagery available in Google Maps is captured using specialized vehicles called Street View cars. These cars carry multiple cameras capturing pictures as the car moves around a city. Capturing the imagery of a city poses an optimization problem: the fleet of cars is available for a limited amount of time and we want to cover as much of the city streets as possible.