Splitting a zip file (or any file) into smaller parts

In this post, we will explain the following commands:

zip Original.zip Original/split -b 5M -d Original.zip Parts.zip.cat Parts.zip* > Final.zipunzip Final.zip -d Final

These commands are commonly used in Linux/Unix systems and can be very helpful when working with large files or transferring files over a network.

Command 1: zip Original.zip Original/

The zip command is used to compress files and create a compressed archive. In this command, we are compressing the directory named Original and creating an archive named Original.zip. The -r option is used to recursively include all files and directories inside the Original directory in the archive.

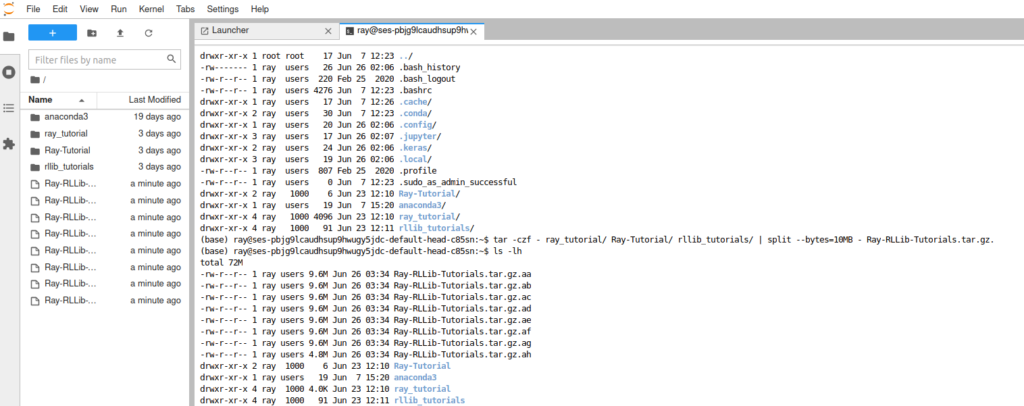

Command 2: split -b 5M -d Original.zip Parts.zip.

The split command is used to split a large file into smaller files. In this command, we are splitting the file Original.zip into smaller files with a size of 5 MB each. The -b option specifies the size of each split file, and the -d option is used to create numeric suffixes for the split files. The Parts.zip is the prefix for the split files.

Command 3: cat Parts.zip* > Final.zip

The cat command is used to concatenate files and print the output to the standard output. In this command, we are concatenating all the split files (which have the prefix Parts.zip) into a single file named Final.zip. The * is a wildcard character that matches any file with the specified prefix.

Command 4: unzip Final.zip -d Final

The unzip command is used to extract files from a compressed archive. In this command, we extract the files from the archive Final.zip and store them in a directory named Final. The -d option is used to specify the destination directory for the extracted files.

In conclusion, these commands can be beneficial when working with large files or transferring files over a network. By using the zip and split commands, we can compress and split large files into smaller ones, making them easier to transfer. Then, using the cat command, we can concatenate the split files into a single file. Finally, we can use the unzip command to extract the files from the compressed archive.