Automatic generation of phone background images using ImageMagick

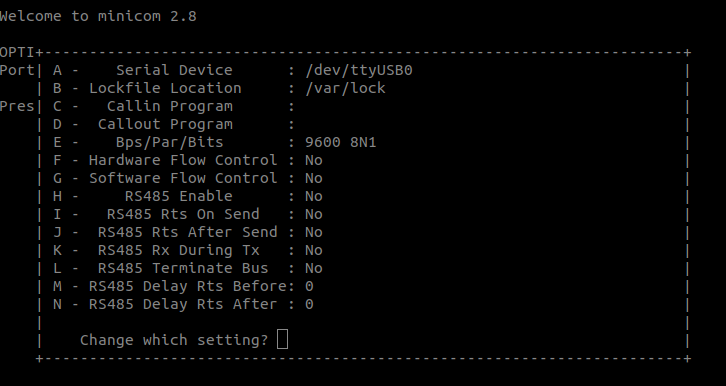

#!/bin/bash

#Once upon a time, there was a script that had a mission to create some wallpapers. First, it created a variable called "EXPORT_FOLDER" and assigned it the value "Wallpapers". Then, it made a new directory called "Wallpapers" where it would save the wallpapers it created.

EXPORT_FOLDER="Wallpapers";

mkdir -p "$EXPORT_FOLDER";

#The script defined two variables, "FLOOR" and "CEILING", and assigned them the values -180 and 180 respectively. It also created a variable "RANGE" holding the number of integers in that interval: CEILING - FLOOR + 1, which is 361. Additionally, it created two variables, "WALLPAPER_WIDTH" and "WALLPAPER_HEIGHT", and assigned them 1440 and 3040 respectively, which would be the canvas size, in pixels, of the images it generated.

FLOOR=-180;

CEILING=180;

RANGE=$(($CEILING-$FLOOR+1));

WALLPAPER_WIDTH=1440;

WALLPAPER_HEIGHT=3040;

#The script then began its main task: a loop that would run 10 times. Within the loop, it would create a variable "RESULT" and assign it a random number from the $RANDOM variable (an integer from 0 to 32767). Then, it would use the modulo operator to reduce "RESULT" to the remainder of dividing it by "RANGE", a value from 0 to 360. Next, it would add "FLOOR" to "RESULT", shifting it into the interval from -180 to 180.

for i in {1..10}

do

RESULT=$RANDOM;

let "RESULT %= $RANGE";

RESULT=$(($RESULT+$FLOOR));

#After all these calculations, the script used the convert command from the ImageMagick suite to generate an image from the plasma:fractal generator, blur it with -blur 0x2, swirl it by "RESULT" degrees, and shave 20 pixels off each edge. The generated image was saved to "$EXPORT_FOLDER/plasma_swirl_$i.jpg".

convert -size "$WALLPAPER_WIDTH"x"$WALLPAPER_HEIGHT" plasma:fractal -blur 0x2 -swirl $RESULT -shave 20x20 "$EXPORT_FOLDER/plasma_swirl_$i.jpg";

#Finally, the script used the convert command twice more to composite an overlay onto plasma_swirl_$i.jpg: "ByteFreaks.net_.png" for the result saved as "lock_$i.jpg" and "cropped-ByteFreaks.net_.png" for the result saved as "home_$i.jpg". And after 10 loops of all these steps, the script had successfully created 10 unique and interesting backgrounds, each with a lock and a home variant, saving them all in the "Wallpapers" folder. The script was proud of its accomplishment and the wallpapers were enjoyed by many.

convert "$EXPORT_FOLDER/plasma_swirl_$i.jpg" "ByteFreaks.net_.png" -gravity southeast -geometry +333+1600 -composite "$EXPORT_FOLDER/lock_$i.jpg";

convert "$EXPORT_FOLDER/plasma_swirl_$i.jpg" "cropped-ByteFreaks.net_.png" -gravity southeast -geometry +0+0 -composite "$EXPORT_FOLDER/home_$i.jpg";

done
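
The three lines that turn $RANDOM into a swirl angle can be folded into one arithmetic expression. The sketch below is an illustration only: the function name random_in_range is hypothetical and does not appear in the script above.

```bash
#!/bin/bash
# Hypothetical helper (not in the original script): prints a random
# integer between floor and ceiling, both inclusive.
random_in_range() {
    local floor=$1 ceiling=$2
    local range=$(( ceiling - floor + 1 ))   # 361 for -180..180
    # $RANDOM yields 0..32767; the remainder is 0..range-1,
    # and adding floor shifts it into floor..ceiling.
    echo $(( RANDOM % range + floor ))
}

# Print a few sample swirl angles.
for i in {1..5}; do
    random_in_range -180 180
done
```

Because 32768 is not a multiple of 361, some angles come up very slightly more often than others. For decorative wallpapers that bias is harmless.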

This script is written in Bash and it does the following:

- It creates a variable called “EXPORT_FOLDER” and assigns it the value “Wallpapers”.

- It creates a directory with the name of the variable “EXPORT_FOLDER” (i.e. “Wallpapers”).

- It creates two variables, “FLOOR” and “CEILING”, and assigns them the values -180 and 180 respectively. It also creates a variable “RANGE”, computed as CEILING - FLOOR + 1 (361), which is the number of integers from FLOOR to CEILING inclusive.

- It creates two variables “WALLPAPER_WIDTH” and “WALLPAPER_HEIGHT” and assigns them 1440 and 3040 respectively.

- It starts a loop that runs 10 times. Within the loop, it does the following:

    - It creates a variable “RESULT” and assigns it a random number from the $RANDOM variable.

    - It uses the modulo operator to calculate the remainder of dividing “RESULT” by “RANGE”, and assigns the result back to “RESULT”.

    - It adds “FLOOR” to “RESULT” and assigns it back to “RESULT”, which leaves a value between -180 and 180.

    - It uses the convert command from the ImageMagick suite to generate an image from the plasma:fractal generator, blurs it with -blur 0x2, swirls it by “RESULT” degrees, and shaves 20 pixels off each edge. The generated image is saved to “$EXPORT_FOLDER/plasma_swirl_$i.jpg”.

    - It then uses the convert command twice more to composite an overlay onto plasma_swirl_$i.jpg: “ByteFreaks.net_.png” for the result saved as “lock_$i.jpg” and “cropped-ByteFreaks.net_.png” for the result saved as “home_$i.jpg”.
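
The two composite steps read “ByteFreaks.net_.png” and “cropped-ByteFreaks.net_.png” from the current directory, so it may be worth checking that they are present before the loop starts. A minimal sketch of such a check follows; the helper name missing_files is hypothetical and is not part of the script above.

```bash
#!/bin/bash
# Hypothetical pre-flight helper (not in the original script).
# Prints the name of every listed file that does not exist and
# returns non-zero if at least one was missing.
missing_files() {
    local status=0 f
    for f in "$@"; do
        if [ ! -f "$f" ]; then
            echo "$f"
            status=1
        fi
    done
    return "$status"
}

# Warn about absent overlays before any wallpaper is generated.
if ! missing_files "ByteFreaks.net_.png" "cropped-ByteFreaks.net_.png"; then
    echo "Add the overlay images listed above before generating wallpapers." >&2
fi
```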

In short, each of the 10 loop iterations creates three JPEG files: a swirled fractal plasma image (plasma_swirl_$i.jpg) and two copies of it with a different overlay image composited on top (lock_$i.jpg and home_$i.jpg). All 30 files are saved in the “Wallpapers” folder.
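
One detail worth working through is the final image size. The -shave 20x20 option removes 20 pixels from each of the four edges, so the saved images are smaller than the 1440 by 3040 canvas. The arithmetic, along with the number of files written, can be sketched as follows; the variable names SHAVE, ITERATIONS, and FILES_PER_ITERATION are introduced here for illustration only.

```bash
#!/bin/bash
WALLPAPER_WIDTH=1440
WALLPAPER_HEIGHT=3040
SHAVE=20                 # hypothetical name for the 20 in -shave 20x20
ITERATIONS=10            # the loop runs for i in {1..10}
FILES_PER_ITERATION=3    # plasma_swirl, lock and home

# Shaving removes SHAVE pixels from both sides of each dimension.
FINAL_WIDTH=$(( WALLPAPER_WIDTH - 2 * SHAVE ))
FINAL_HEIGHT=$(( WALLPAPER_HEIGHT - 2 * SHAVE ))
TOTAL_FILES=$(( ITERATIONS * FILES_PER_ITERATION ))

echo "Final size: ${FINAL_WIDTH}x${FINAL_HEIGHT}"   # Final size: 1400x3000
echo "Files written: $TOTAL_FILES"                  # Files written: 30
```

If the wallpapers need to be exactly 1440 by 3040, one option is to enlarge the canvas by 40 pixels in each dimension so that the shaved result matches the target size.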